Why does responsible AI matter? Guidelines for securing your AI future

How to build on established cybersecurity frameworks to protect against the latest AI threats

Artificial intelligence (AI) is rapidly transforming industries, but its power comes with responsibility. Despite significant progress in AI and machine learning (ML), these technologies remain vulnerable to attacks with serious consequences, such as bad actors “poisoning” AI systems so that they malfunction or corrupting data for misuse.

Imagine the fallout if a self-driving car misinterprets road signs due to a subtle attack, or if a medical AI system misdiagnoses patients due to biased data. These are not theoretical concerns — they expose a fundamental truth about AI: it can be as vulnerable as any other technology.

“When the pace of development is high — as is the case with AI — security can often be a secondary consideration,” according to the National Cyber Security Centre. “Security must be a core requirement, not just in the development phase, but throughout the lifecycle of the system.”

Security measures, including data security and privacy, are a necessary concern when it comes to AI, but there are also frameworks and principles to consider so that you deploy AI responsibly and in line with corporate governance.

What is responsible AI?

Responsible AI (RAI) encompasses the safe and ethical development and deployment of AI technologies, enabling trust, fairness, security, and legal compliance. As AI innovation accelerates, guardrails like these are essential to secure, govern, and manage the use and deployment of AI, and to prevent AI from causing harm or replicating real-world biases.

Microsoft has defined six essential principles to guide the responsible use and development of AI systems: fairness, reliability and safety, privacy and security, inclusiveness, transparency, and accountability. Called the Responsible AI Standard, this framework is Microsoft’s “cornerstone of a responsible and trustworthy approach to AI, especially as intelligent technology becomes more prevalent in products and services that people use every day.”

“Trustworthy AI systems are those demonstrated to be valid and reliable; safe, secure and resilient; accountable and transparent; explainable and interpretable; privacy-enhanced; and fair with harmful bias managed,” per the National Institute of Standards and Technology (NIST), part of the U.S. Department of Commerce.

To help you build your responsible AI practice, we’ll break down what constitutes an AI system (what you’re securing), the latest threats (what you’re protecting against), and how to protect an AI system.

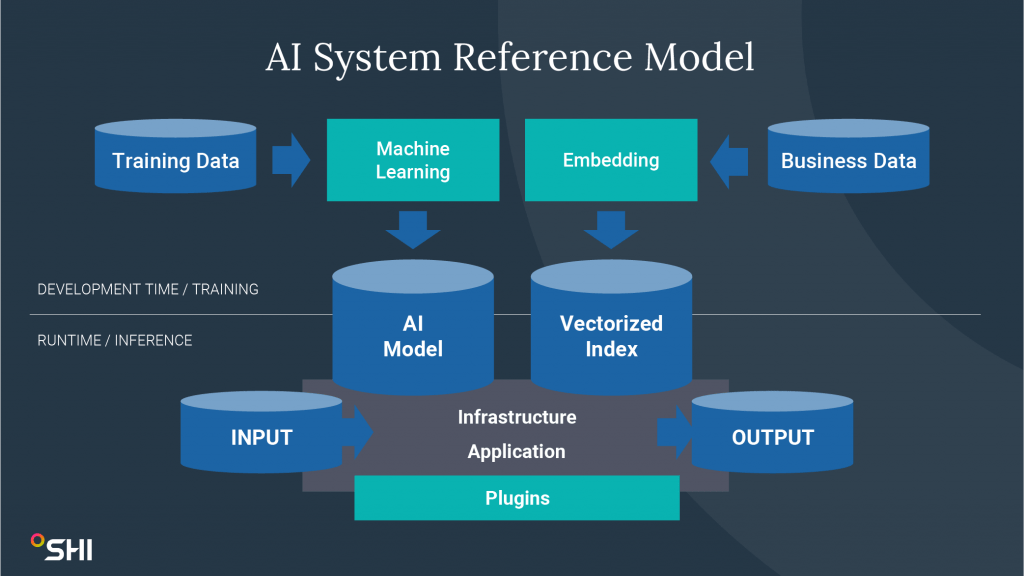

What is an AI system?

An AI system is an intelligent, machine-based system that simulates human problem-solving processes and capabilities. Two sides work together to create an effective system.

Runtime/inference involves the actual operation of AI applications and models, including their deployment, monitoring, and interaction with users or other systems. Development and training cover the process of creating, tuning, or augmenting AI models, which includes gathering data, training models, testing, and refining algorithms.

Both sides of an AI system include unique risks and require specific security and responsible AI practices to mitigate them.

AI threats and adversarial machine learning

Building and deploying AI systems exposes your organization to known and emerging threats. Adversarial machine learning (AML) is an essential resource to inform your AI cybersecurity strategy: it is a burgeoning field that studies the capabilities and goals of attackers who exploit vulnerabilities during the development, training, and deployment phases of the ML lifecycle.

While this list is not exhaustive, NIST recognizes four major types of attacks to be aware of.

Evasion attacks

Evasion attacks are strategies in which attackers craft special inputs, changed only slightly from normal ones, that trick a system into misclassifying them. These inputs look almost normal but can make the system assign them to any category the attacker chooses. For example, according to NIST, markings added to stop signs can cause an autonomous vehicle to read them as speed limit signs instead.
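
To make the idea concrete, here is a minimal sketch of an evasion attack against a toy linear classifier. The weights, the four input features, and the "stop sign" versus "speed limit sign" labels are all hypothetical values chosen for illustration; real attacks target far larger models, but the principle of nudging each feature slightly in the most damaging direction is the same.

```python
# Toy evasion attack on a linear classifier.
# All weights and inputs are made-up illustration values, not a real model.

def predict(weights, bias, x):
    """Return 1 if the linear score is positive, else 0."""
    score = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if score > 0 else 0


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def evade(weights, x, epsilon):
    """Shift every feature by at most epsilon in the direction that lowers the score."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model: class 1 = "stop sign", class 0 = "speed limit sign".
WEIGHTS = [0.9, -0.4, 0.7, 0.2]
BIAS = -0.1
clean = [0.30, 0.50, 0.20, 0.40]

adversarial = evade(WEIGHTS, clean, epsilon=0.15)

print(predict(WEIGHTS, BIAS, clean))        # 1: read as a stop sign
print(predict(WEIGHTS, BIAS, adversarial))  # 0: now read as a speed limit sign
```

No feature moves by more than 0.15, yet the predicted class flips. This is why input validation and perturbation detection matter at inference time.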

Poisoning attacks

Poisoning attacks are adversarial attacks that introduce corrupted data during the training stage of the ML algorithm. They cause either an availability violation, which degrades the ML model’s performance across the board, or an integrity violation, which produces incorrect predictions on inputs the attacker targets.
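
The sketch below illustrates the availability case with a deliberately simple nearest-centroid classifier. The one-dimensional data, the labels, and the three injected samples are assumptions made up for this example. It shows how a handful of mislabeled training points can drag down accuracy on clean test data.

```python
from statistics import mean

# Toy data poisoning demo; all samples are (feature, label) pairs invented for illustration.

def train(samples):
    """Fit a nearest-centroid classifier: one mean feature value per label."""
    labels = {label for _, label in samples}
    return {label: mean(x for x, l in samples if l == label) for label in labels}


def predict(centroids, x):
    """Return the label whose centroid is closest to x."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


def accuracy(centroids, samples):
    """Fraction of samples the classifier labels correctly."""
    return sum(predict(centroids, x) == label for x, label in samples) / len(samples)


clean_training = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
test_set = [(2.5, 0), (4.0, 0), (6.0, 1), (7.5, 1)]

# The attacker slips three extreme points with the wrong label into the training set.
poison = [(20.0, 0), (20.0, 0), (20.0, 0)]

clean_model = train(clean_training)
poisoned_model = train(clean_training + poison)

print(accuracy(clean_model, test_set))     # 1.0
print(accuracy(poisoned_model, test_set))  # 0.5
```

Checks such as data quality assurance and outlier screening on training sets aim to catch injected points like these before they reach the model.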

Privacy attacks

Privacy attacks occur during deployment and attempt to learn sensitive information about the AI or the data it was trained on in order to misuse it. The goal is to reverse engineer private information, such as an individual user record or sensitive critical infrastructure data. An attacker who works out which online sources a model draws on can also tamper with them: “Adding undesired examples to those online sources could make the AI behave inappropriately, and making the AI unlearn those specific undesired examples after the fact can be difficult,” explained NIST.

Abuse attacks

Generative AI introduces a new category of attacker goals: abuse violations. This broadly refers to an attacker repurposing a system’s intended use to achieve their own objectives, for example by inserting incorrect information into a legitimate but compromised online source for the AI to absorb.

How to protect your AI systems

Are AI-driven threats and security measures new problems or age-old challenges? The good news is that effective, conventional cybersecurity controls apply to AI systems just as they do to any other area of security.

Don’t reinvent your entire cybersecurity practice. Start with what you know works. A strong identity, data, and threat management practice is an excellent foundation for a responsible and secure AI practice. Follow the principles of a trusted cybersecurity framework, such as the NIST Cybersecurity Framework or the Center for Internet Security (CIS) Controls, with a heightened focus on identity and access management, data-centric security, and deep visibility and reporting.

Considering how these principles apply to the runtime/production of AI systems, our experts recommend several mitigation strategies to enable responsible and secure deployment, operation, and use.

- Apply standard DevOps and application security best practices.

- Implement standard infrastructure best practices via secure landing zones.

- Tighten data retention policies.

- Limit the impact of AI by minimizing privileges and adding oversight, e.g., guardrails and human review.

- Implement AI governance.

- Improve regular application and system security by understanding AI particularities, e.g., model parameters need protection, and access to the model needs to be monitored and rate-limited.
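
As one illustration of the last point, monitored and rate-limited model access can be sketched as a small gateway in front of the model. `ModelGateway`, `TokenBucket`, `RateLimitExceeded`, and the stand-in model function are hypothetical names invented for this example, and the limits are arbitrary. A production deployment would more likely rely on an API gateway or an equivalent managed service.

```python
import time

# Hypothetical sketch: per-caller rate limiting plus an audit trail for model access.

class RateLimitExceeded(Exception):
    """Raised when a caller has used up their request budget."""


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Credit tokens for the time elapsed since the last request, up to capacity.
        earned = (now - self.updated) * self.refill_per_second
        self.tokens = min(self.capacity, self.tokens + earned)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class ModelGateway:
    """Wrap a model function so every call is rate-limited and logged per caller."""

    def __init__(self, model_fn, capacity=2, refill_per_second=1.0, clock=time.monotonic):
        self.model_fn = model_fn
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.buckets = {}
        self.audit_log = []  # (caller_id, allowed) pairs for later review

    def query(self, caller_id, prompt):
        if caller_id not in self.buckets:
            self.buckets[caller_id] = TokenBucket(
                self.capacity, self.refill_per_second, self.clock
            )
        allowed = self.buckets[caller_id].allow()
        self.audit_log.append((caller_id, allowed))
        if not allowed:
            raise RateLimitExceeded(f"too many requests from {caller_id}")
        return self.model_fn(prompt)


# Stand-in for a real model call.
gateway = ModelGateway(lambda prompt: prompt.upper())
for attempt in range(3):
    try:
        print(gateway.query("alice", "hello"))
    except RateLimitExceeded as error:
        print("blocked:", error)
```

The audit log gives you the visibility needed to spot callers who probe a model with unusually many queries, which is a common precursor to privacy and model-extraction attacks.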

On the development/training side, you can safeguard the secure development of AI systems by implementing the following mitigation techniques.

- Leverage foundation models.

- Customize/bring your own data into models using lower-risk techniques like retrieval-augmented generation (RAG).

- Specialize models as much as possible.

- Obfuscate data.

- Minimize data as much as possible.

- Extend security and development practices to include data science activities, especially to protect and streamline the engineering environment.

- Explore countermeasures in data science by understanding model attacks, e.g., data quality assurance, larger training sets, detecting common perturbation attacks, and input filtering.
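
Data minimization and obfuscation, two of the techniques listed above, can be combined in a small preprocessing step that runs before records reach a training or retrieval pipeline. The sketch below is a rough illustration only: the record schema, the `ALLOWED_FIELDS` list, the email pattern, and the demo key are all assumptions, and a real deployment would need proper key management and broader detection of personal data.

```python
import hashlib
import hmac
import re

# Hypothetical schema: only these fields are needed downstream.
ALLOWED_FIELDS = ("user_id", "ticket_text", "product")

# Simplified pattern; it will not catch every valid email address.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def pseudonymize(value, secret_key):
    """Replace an identifier with a truncated keyed hash: linkable, but not readable."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def prepare_record(record, secret_key):
    """Drop fields that are not needed, then obfuscate what remains."""
    minimized = {key: record[key] for key in ALLOWED_FIELDS if key in record}
    if "user_id" in minimized:
        minimized["user_id"] = pseudonymize(minimized["user_id"], secret_key)
    if "ticket_text" in minimized:
        minimized["ticket_text"] = EMAIL_PATTERN.sub("[EMAIL]", minimized["ticket_text"])
    return minimized


raw_record = {
    "user_id": "u-1001",
    "ticket_text": "Reach me at jane@example.com please",
    "product": "router",
    "home_address": "1 Main St",  # not needed downstream, so it is dropped
}

# Demo key only; in practice, load the key from a secrets manager.
safe_record = prepare_record(raw_record, secret_key=b"demo-key")
print(safe_record["ticket_text"])  # Reach me at [EMAIL] please
print(sorted(safe_record))         # ['product', 'ticket_text', 'user_id']
```

Using a keyed hash rather than a plain hash means the same user still maps to the same pseudonym across records, while anyone without the key cannot rebuild the mapping by hashing guessed identifiers.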

SHI’s AI solutions can help secure your journey

Incorporating AI solutions can transform your organization — automating processes, optimizing workflows, and unleashing a competitive edge.

Harness the power of AI while navigating the risks with our expert team. To prevent a devastating AI-powered breach, start with SHI’s Security Posture Review. We’ll also help build a secure cloud foundation based on your specific needs, followed by our private AI development solutions designed around your governance, sovereignty, and privacy standards. For proven, tailored insights, SHI’s AI & Cyber labs offer a space to collaborate with data scientists on small-scale projects before scaling.

Whether you need enterprise-wide AI deployment and integration services, or a strategy to kickstart and roadmap your AI objectives, we can help. To get started, book an AI Executive Review with our team, a smart first step to ensuring a successful, secure, and responsible AI future.