How to build AI-ready data centers that cut back on energy consumption

Energy-hungry AI shouldn’t derail your sustainable infrastructure goals.

Artificial intelligence (AI) has pervaded nearly every aspect of IT – from the devices we use to perform everyday tasks to the agents troubleshooting our common issues. But as your AI use cases increase, so do the power and cooling demands of your data center.

In fact, according to the United Nations Environment Programme, AI’s energy demands are so great that a single large language model (LLM) query requires 2.9 watt-hours of electricity, versus just 0.3 watt-hours for a regular internet search. This is a nearly tenfold increase in energy consumption.
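To put the UNEP figures in perspective, the short Python sketch that follows scales them to a daily workload. The 100,000-requests-per-day volume and the `daily_energy_kwh` helper are hypothetical, chosen only for illustration; they are not measurements from any real deployment.

```python
# Per-request figures quoted above (UNEP), in watt-hours.
LLM_QUERY_WH = 2.9
WEB_SEARCH_WH = 0.3


def daily_energy_kwh(queries_per_day: int, wh_per_query: float) -> float:
    """Convert a daily request volume into kilowatt-hours per day."""
    return queries_per_day * wh_per_query / 1000  # Wh -> kWh


ratio = LLM_QUERY_WH / WEB_SEARCH_WH
print(f"LLM query vs. web search: {ratio:.1f}x")  # 9.7x

# Hypothetical workload: 100,000 requests per day.
queries = 100_000
print("LLM kWh/day:", daily_energy_kwh(queries, LLM_QUERY_WH))
print("Search kWh/day:", daily_energy_kwh(queries, WEB_SEARCH_WH))
```

At that hypothetical volume, the same traffic costs roughly 290 kWh per day as LLM queries versus about 30 kWh as searches.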

That leaves organizations in quite the quandary: how can you reap the full benefits of AI without overloading your data center and exacerbating your greenhouse gas (GHG) emissions?

1. Avoid unintended AI sprawl

The simplest way to avoid an energy crisis in your data center? Deploy AI not as a knee-jerk solution for anything and everything, but as a tactical solution for specific problems and use cases that will truly benefit your end users.

It’s surprisingly easy to fall down the AI use case rabbit hole: a chatbot for every webpage, an agent for every support category, a generative AI platform for every employee. Remember your personas, the problems you need AI to solve, and the use cases most important to your organization.

When you deploy AI strategically and methodically, you can avoid the kind of AI sprawl that would send your energy consumption levels into the stratosphere.

2. Optimize your data center cooling

If you’re like most organizations, you likely built your data center with the mentality of 100% cooling 100% of the time. And why wouldn’t you? You need your servers running at optimal temperatures, or else you risk application slowdowns or worse: frying your server internals.

While high-use servers do need that constant cooling, those operating at a lower intensity may not. Take the time to monitor your data center on a server-by-server level and ask yourself: does rack A need the same liquid cooling as rack B? If racks C and D are only at peak use three hours per day, do they need the same constant cooling as A and B?

If you can tweak your HVAC and liquid cooling while still maintaining optimal server temperatures, you can save valuable energy. And in the case of liquid cooling, you can also help your locality conserve precious water.
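One way to start that rack-by-rack review is to classify racks by how long they run at peak. This Python sketch assumes you already collect per-rack utilization from your monitoring tools; the `RackUsage` fields, the `cooling_tier` helper, and the cutoff values are all hypothetical and would need tuning against your hardware's rated inlet temperatures.

```python
from dataclasses import dataclass


@dataclass
class RackUsage:
    name: str
    peak_hours_per_day: float  # hours per day spent above a high-load threshold
    avg_utilization: float     # average utilization over the day, 0.0 to 1.0


def cooling_tier(rack: RackUsage,
                 peak_hours_cutoff: float = 8.0,
                 util_cutoff: float = 0.6) -> str:
    """Suggest a cooling profile for a rack (hypothetical thresholds)."""
    if rack.peak_hours_per_day >= peak_hours_cutoff or rack.avg_utilization >= util_cutoff:
        return "constant"   # sustained high load: keep full cooling
    if rack.peak_hours_per_day > 0:
        return "scheduled"  # ramp cooling up around known peak windows
    return "reduced"        # low, steady load: candidate for lower cooling output


# Racks A and B run hot most of the day; C and D peak about three hours a day.
racks = [
    RackUsage("A", 20, 0.85),
    RackUsage("B", 18, 0.75),
    RackUsage("C", 3, 0.30),
    RackUsage("D", 3, 0.25),
]
for r in racks:
    print(r.name, cooling_tier(r))
```

The tiers are recommendations for review, not control signals; any change should be validated against measured server temperatures before it's applied.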

3. Refresh your servers with AI in mind

Unlike many traditional applications, AI platforms and solutions heavily tax a server’s GPU. Neural processing units (NPUs) can offset some of this demand, though they’re typically found only in hardware released within the past couple of years.

This means if your servers are years old or outfitted for CPU-intensive applications, they likely don’t have the GPU or NPU capacity to handle AI workloads.

Whether through total refreshes or targeted rebuilds and upgrades, consider future-proofing your data center with hardware that will sustain your AI journey over the long term. The right hardware won’t just enable AI workloads today; it will keep operating efficiently and effectively as AI use cases grow more common and more complex.

4. Test server configurations before you buy

Remember: building an AI-ready data center that’s future-proof without going overboard is a delicate balancing act. After all, an easy way to let energy waste spiral out of control is to over-configure, buying server hardware far beyond your actual needs or capacity. Doing so increases both the heat produced by powerful, demanding components and the power needed to cool them.

That’s why it’s important to test configurations before you buy. Carry out a bench test to understand a server’s real capacity, power draw, and cooling requirements, then compare those against your needs and facility capabilities before making any deployment decisions. Doing so lets you plan more accurately how you’ll power your new servers and reuse the heat they radiate.
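Bench-test results are most useful once they’re translated into facility terms. The following sketch converts a measured per-server power draw into rack load and heat output, using the standard conversion of about 3.412 BTU per hour per watt, and checks it against a rack power budget. The `rack_plan` helper and every example number are hypothetical.

```python
# 1 W of electrical load is roughly 3.412 BTU/h of heat.
WATTS_TO_BTU_PER_HOUR = 3.412


def rack_plan(server_watts: float, servers_per_rack: int, rack_budget_watts: float) -> dict:
    """Compare bench-measured server power with a rack's power budget."""
    rack_watts = server_watts * servers_per_rack
    return {
        "rack_kw": rack_watts / 1000,
        # Nearly all electrical power drawn by IT equipment ends up as heat.
        "heat_btu_per_hr": rack_watts * WATTS_TO_BTU_PER_HOUR,
        "fits_budget": rack_watts <= rack_budget_watts,
        "headroom_kw": (rack_budget_watts - rack_watts) / 1000,
    }


# Hypothetical bench-test result: 2,800 W per server under load,
# four servers per rack, and a 15 kW rack power budget.
plan = rack_plan(server_watts=2800, servers_per_rack=4, rack_budget_watts=15_000)
print(plan)
```

A negative `headroom_kw` would flag a configuration that exceeds the rack's power (and, by extension, cooling) budget before any hardware is purchased.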

Future-proof data centers can help reduce scope 1, 2, and 3 emissions

Your data center’s power and cooling needs are large and important parts of your overall carbon footprint. But if you take a step back, you’ll see the environmental costs of a data center are far, far wider than electricity usage – making it all the more important to build a truly future-proof data center.

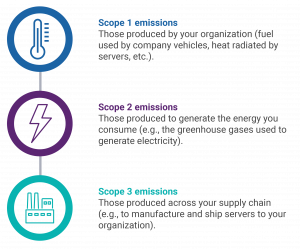

We’ve already discussed the water needed to cool your servers. But what about the precious metals needed to build your components? Or the fossil fuels used to power OEM factories? The greenhouse gas emissions tied to all of this fall into three categories: scope 1, scope 2, and scope 3. And while many of them may seem largely out of your hands, planning for the long term now can shape your organization’s impact for years to come.

What are scope 1 emissions?

Scope 1 emissions are the easiest to understand because we see them nearly every day. They’re the emissions produced directly by sources your organization owns or controls, such as fuel burned in on-site backup generators or refrigerant leaking from cooling equipment.

Think of the heat radiated by your servers. According to Data Center Knowledge, 98% of the energy data centers consume is wasted as heat – meaning only 2% is used to power your business-critical applications.

Where does that heat go? Does your organization have a way to repurpose it, or is it left to dissipate into the environment? And if you’re using liquid cooling, can you recycle or reuse the heated water in a way that doesn’t harm your local ecosystem?

The Swiss company Infomaniak, for example, has found a way to heat homes in its base city of Geneva with the heat emitted by its data centers, showing the real potential of recycling data center heat to benefit our communities.
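A rough estimate of how much heat is available for reuse can start from your IT load, since nearly all the electricity servers draw ends up as heat. This back-of-the-envelope sketch uses illustrative assumptions (a 500 kW average IT load, a 60% capture rate, and 10 MWh of annual heating demand per home) that are not figures from Infomaniak or any real facility; the helper names are hypothetical.

```python
HOURS_PER_YEAR = 8760


def recoverable_heat_mwh(it_load_kw: float, recovery_fraction: float) -> float:
    """Annual heat (MWh) that could be captured from a steady IT load."""
    # Simplification: treat all IT electrical load as heat output.
    return it_load_kw * HOURS_PER_YEAR * recovery_fraction / 1000  # kWh -> MWh


def homes_heated(heat_mwh: float, home_demand_mwh: float) -> float:
    """How many homes that heat could cover, given annual demand per home."""
    return heat_mwh / home_demand_mwh


# Hypothetical inputs: 500 kW IT load, 60% of heat captured, 10 MWh per home.
heat = recoverable_heat_mwh(500, 0.6)
print(f"{heat:.0f} MWh/year, roughly {homes_heated(heat, 10):.0f} homes")
```

Real projects also depend on the temperature of the recovered heat, the distance to users, and whether heat pumps are needed to raise it to a usable level.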

What are scope 2 emissions?

Speaking of electricity, scope 2 emissions are the indirect emissions caused by the production of the energy your data center requires.

If, for example, the roof of your headquarters is lined with solar panels, you might produce enough renewable energy to power and cool your data center without drawing on a public utility. But if you rely on public utilities, at least part of your power likely comes from nonrenewable, polluting sources such as fossil fuels.

In the U.S., natural gas, oil, and coal account for nearly 60% of all electric generation owned by public power companies. If you rely on these companies to power your data center, it’s all the more important that you find ways to optimize your energy consumption.
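Scope 2 emissions from purchased electricity are commonly estimated with the location-based method described in the GHG Protocol’s Scope 2 Guidance: electricity consumed multiplied by the local grid’s average emission factor. This sketch uses a hypothetical 1 MW average load, an illustrative factor of 0.4 kg CO2e per kWh, and a hypothetical 15% efficiency gain; substitute the published factor for your own grid region.

```python
HOURS_PER_YEAR = 8760


def scope2_location_based_tco2e(annual_kwh: float, grid_factor_kg_per_kwh: float) -> float:
    """Location-based scope 2: electricity consumed x grid emission factor."""
    return annual_kwh * grid_factor_kg_per_kwh / 1000  # kg -> metric tons CO2e


# Hypothetical inputs: 1,000 kW average facility load running all year,
# and an illustrative grid factor of 0.4 kg CO2e per kWh.
annual_kwh = 1_000 * HOURS_PER_YEAR
baseline = scope2_location_based_tco2e(annual_kwh, 0.4)

# The same facility after a hypothetical 15% cut in energy use.
improved = scope2_location_based_tco2e(annual_kwh * 0.85, 0.4)
print(f"baseline: {baseline:.0f} t CO2e, improved: {improved:.0f} t CO2e")
```

Because the result scales linearly with consumption, every percentage point of energy saved on a fossil-heavy grid removes the same percentage of location-based scope 2 emissions.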

What are scope 3 emissions?

As we zoom out even further from our data center, we see scope 3 emissions – those produced within our supply chain.

These include the mining of precious metals needed to build our components, the fossil fuels used to power the factories that manufacture our devices, and the GHGs produced to ship our hardware from the factory to our data center via ship, plane, train, and/or truck.

How can we reduce our scope 3 emissions? By making hardware last. When you build your AI-ready data center, it’s important to test and evaluate platforms and solutions so you know not just what will work today, but what you’ll need for the years ahead.
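One way to see why hardware longevity matters is to spread a server’s embodied emissions (from mining, manufacturing, and shipping) across its years in service. In this sketch, the 1,500 kg CO2e figure and the `annual_embodied_kg` helper are placeholders for illustration, not measured values for any real server.

```python
def annual_embodied_kg(embodied_kg: float, service_years: float) -> float:
    """Spread a server's embodied emissions evenly over its service life."""
    if service_years <= 0:
        raise ValueError("service_years must be positive")
    return embodied_kg / service_years


# Hypothetical embodied footprint per server, in kg CO2e.
EMBODIED_KG = 1_500

for years in (3, 5, 7):
    print(f"{years} years in service: {annual_embodied_kg(EMBODIED_KG, years):.0f} kg CO2e/year")
```

A longer service life lowers the annual embodied share, but it should be weighed against the operational efficiency gains newer hardware might offer.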

Prepare your AI-ready data center at SHI’s AI & Cyber Labs

Maintaining control of your GHG emissions in the age of AI means building and scaling an AI-ready data center that can perform intensive tasks without going into overdrive. You can start by understanding the resource needs of the AI solutions you need to deploy.

At our AI & Cyber Labs, you can test and validate AI solutions in a secure, production-grade environment so you know exactly what to expect before you deploy. Develop your AI use cases, stress-test solutions against real-world workflows, data, and security requirements, then adjust as needed to build your ideal solution.

Once your AI journey is refined through our AI & Cyber Labs, you’ll have a greater understanding of what you need to build out a future-proof data center that’s ready for whatever AI workflows you’ll throw at it.

And because you’ll know the ins and outs of your modernized servers, you should see smooth sailing as you optimize your data center to reduce energy consumption and greenhouse gas emissions.

Are you ready to avoid untamed energy consumption with an AI-ready data center? Explore our AI & Cyber Labs or contact our experts today.