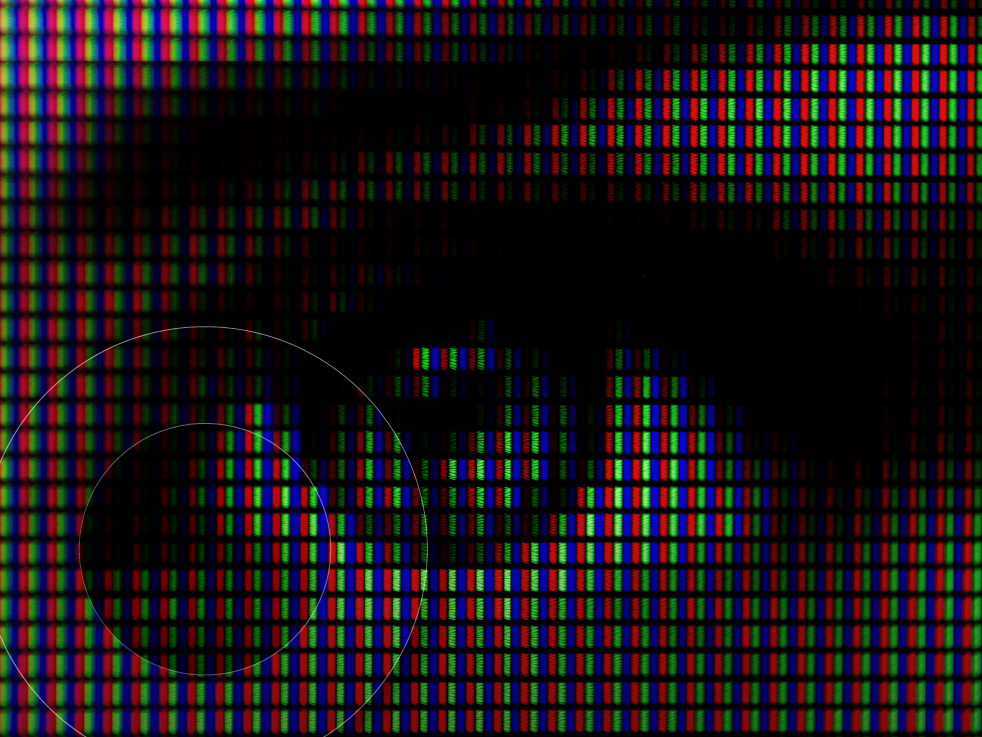

Innovation Heroes: The deepfake that swindled a company out of $25 million

AI-driven deepfakes are taking phishing to a whole new level. Here's how it cost one company millions.

Picture this: You’re wrapping up what seemed like a routine video conference. Your CFO was there, along with several colleagues. The audio was clear, the video sharp, and everyone looked and sounded exactly as they should. Then comes an urgent follow-up message about a wire transfer discussed during the call. High stakes. Time-sensitive. You act.

Twenty-five million dollars later, the devastating truth emerges: You were the only real person on that call.

This isn’t the plot of a sci-fi thriller — it’s exactly what happened to an employee at a Hong Kong-based company, marking one of the most sophisticated cybercrime operations in history.

The incident represents a watershed moment in how we think about trust, technology, and the very nature of human interaction in our digital world.

The new reality of synthetic trust

The Hong Kong deepfake heist wasn’t just about advanced AI technology; it was about exploiting human psychology at an industrial scale. As Erich Kron, cybersecurity expert and CISO Advisor at KnowBe4, explains in the latest episode of Innovation Heroes, what made this attack so devastatingly effective wasn’t just the quality of the deepfakes, but how the criminals structured the entire operation.

“What happened here is they got around that by simply scripting the entire call and they created the deepfakes offline and basically ran this kind of like a movie, if you will,” Kron says. “And the person who was brought into it, who ended up wiring the money, he was the only person who wasn’t part of this deepfake charade.”

The attackers didn’t rely on real-time deepfake technology, which still has limitations. Instead, they pre-recorded a scripted “movie” featuring synthetic versions of company executives, then engineered a technical “glitch” that ended the call at just the right moment. The follow-up request came via traditional channels, lending an air of authenticity that was impossible to question.

Cybercrime, Inc.: When fraud becomes enterprise-scale

What’s perhaps most alarming about this case is how it reflects the broader industrialization of cybercrime. Kron cites Bloomberg research which found that if global cybercrime were measured as a national economy, it would rank third in the world by GDP, behind only the United States and China.

“Ransomware developers are in charge of the infrastructure and most of the time the payment infrastructure… They’re focused on that. Well, what that does is allow them to focus on that while they have affiliates,” Kron says.

This isn’t your stereotypical image of hoodie-wearing hackers in basement offices. Modern cybercrime operates with the sophistication of Fortune 500 companies, complete with specialized departments, profit-sharing arrangements (typically 70% to affiliates, 30% to developers), and, in some operations, even HR departments.

The Hong Kong attack required extensive reconnaissance to identify the right target: someone with the authority to authorize large transfers without additional approvals. The criminals had to research company executives, harvest video and audio samples from public sources, and coordinate a complex multi-stage operation. This level of planning and execution represents a fundamental shift in how we should think about cybersecurity threats.

The psychology behind the con

Beyond the technological sophistication lies a deeper understanding of human psychology. Kron explains how these attacks exploit what behavioral economist Daniel Kahneman calls “System 1 thinking”: our brain’s automatic, energy-conserving mode that handles routine tasks and emotional responses.

“These bad actors use emotions to drive us into system 1 thinking. System one thinking is where we make mistakes because it’s not critical thinking,” he says.

When we’re under pressure, stressed, or operating in familiar patterns, we’re most vulnerable to deception. The Hong Kong victim had participated in what appeared to be a normal company meeting with familiar faces and voices. When the technical “glitch” ended the call, the subsequent request felt like a natural continuation of business discussed minutes earlier.

This psychological manipulation isn’t new. It’s the same principle behind decades of phone scams and email phishing. What’s changed is the scale and sophistication with which criminals can now deploy these tactics, leveraging AI to create previously impossible levels of authenticity.

Fighting fire with fire: AI-powered defense

The rise of AI-powered attacks demands AI-powered defenses. Organizations are increasingly turning to machine learning (ML) systems that can detect anomalous behavior patterns, analyze communication for signs of social engineering, and flag suspicious activities before damage occurs.
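To make "detecting anomalous behavior patterns" concrete, here is a minimal sketch of one such check. It is not a machine learning model and not how any particular product works; it is a simple statistical stand-in (a modified z-score based on the median absolute deviation) that flags a transfer far outside an account's usual range. The function name, the threshold of 3.5, the minimum history size, and the sample amounts are all illustrative assumptions.

```python
from statistics import median


def is_anomalous(amount, history, threshold=3.5):
    """Flag a transfer whose amount is far outside the account's usual range.

    Hypothetical helper: uses a modified z-score (median and median absolute
    deviation), which is less distorted by past outliers than mean/stdev.
    """
    if len(history) < 5:
        # Assumption: with too little history, send the transfer for review.
        return True

    med = median(history)
    mad = median(abs(x - med) for x in history)

    if mad == 0:
        # Every past transfer was identical; anything different stands out.
        return amount != med

    # 0.6745 scales the MAD so the score is comparable to a standard z-score.
    score = 0.6745 * abs(amount - med) / mad
    return score > threshold


# Made-up past transfer amounts for one account, in USD.
past_transfers = [12_000, 15_000, 9_000, 11_000, 14_000, 13_000]

print(is_anomalous(13_500, past_transfers))      # a typical amount
print(is_anomalous(25_000_000, past_transfers))  # a $25 million request
```

A check like this only catches the unusual amount. It says nothing about whether the person asking is real, which is why it would sit alongside human verification rather than replace it.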

However, as Kron emphasizes, technology alone isn’t enough. The human element remains both the weakest link and the strongest defense in cybersecurity. Effective security programs must focus on changing behavior, not just raising awareness.

The most promising approaches combine automated detection with human education. That means teaching people to recognize the emotional manipulation tactics that drive them into vulnerable “System 1 thinking,” and providing clear protocols for verifying high-stakes requests through independent channels.
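A verification protocol of this kind can be written down as a simple rule: a high-stakes request may only proceed once the requester has been confirmed on a channel other than the one the request arrived on. The sketch below is one hypothetical way to express that rule, not a description of any specific company's policy or product. The `TransferRequest` type, the channel names, and the $10,000 threshold are assumptions chosen for illustration.

```python
from dataclasses import dataclass

# Hypothetical threshold above which independent verification is required.
HIGH_STAKES_USD = 10_000


@dataclass
class TransferRequest:
    amount_usd: float
    request_channel: str  # where the request arrived, e.g. "video_call"
    # Channels on which the requester was confirmed, e.g. a callback to a
    # phone number already on file (not one supplied in the request itself).
    verified_channels: frozenset = frozenset()


def may_execute(req):
    """Allow a high-stakes transfer only after out-of-band confirmation."""
    if req.amount_usd < HIGH_STAKES_USD:
        return True

    # Confirmation on the same channel as the request does not count:
    # if that channel is compromised, so is the confirmation.
    independent = req.verified_channels - {req.request_channel}
    return len(independent) > 0


# A request that arrives on a video call and is never confirmed elsewhere.
unverified = TransferRequest(25_000_000, "video_call")

# The same request after a callback to a known phone number.
verified = TransferRequest(
    25_000_000, "video_call", frozenset({"phone_callback"})
)

print(may_execute(unverified))
print(may_execute(verified))
```

The point of the design is that urgency and familiarity never enter the decision. However convincing the call looked, the transfer stays blocked until someone confirms it through a separate channel.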

The path forward

The Hong Kong deepfake incident offers a stark preview of our digital future. As synthetic media becomes more sophisticated and accessible, the traditional markers we use to establish trust — familiar faces, known voices, consistent communication patterns, etc. — become unreliable.

Organizations must move beyond checkbox compliance approaches to cybersecurity awareness and invest in comprehensive human risk management programs. This means regular training that goes beyond phishing simulations to address the psychological tactics used in social engineering attacks.

That’s where SHI and KnowBe4 can help. Together, we strengthen human risk management through KnowBe4’s AI-driven platform and SHI’s personalized tech integration capabilities. When you work with us, you unlock seamless access to security training, compliance modules, and adaptive defense tools.

NEXT STEPS

Learn more about KnowBe4’s AI-driven human risk management platform and listen to the full conversation here. You can also find episodes of the Innovation Heroes podcast on SHI’s Resource Hub, Spotify, and other major podcast platforms, as well as on YouTube in video format.
